Amazon challenges ‘old-guard’ IBM, Microsoft and Oracle with QuickSight, Kinesis Firehose, Snowball, and new database services. Here’s a quick rundown with cautions for would-be adopters.

Make no mistake: Amazon Web Services (AWS) is doing everything it can to make it easy for enterprises large and small to bring all, or at least part, of their data into its cloud.

At the AWS re:Invent event in Las Vegas on Wednesday, AWS announced a battery of new services designed to lower the cost and simplify the tasks of analyzing data, streaming data, moving data, migrating databases and switching to different database management systems in the cloud. Here’s a quick rundown on five new services with my take on implications for would-be customers.


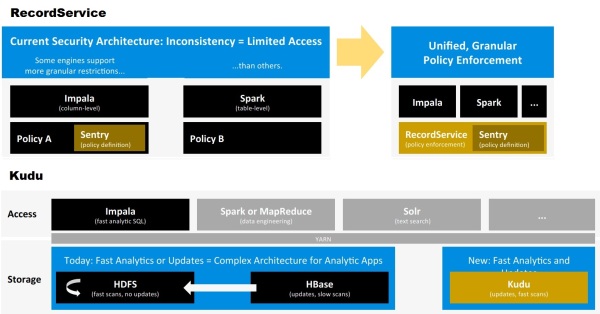

QuickSight is a data-analysis, visualization and dashboarding suite introduced at AWS re:Invent. It promises ease of use and low costs starting at $9 per user, per month.

Fast to deploy, easy to use, low cost: these are the three promises of QuickSight, which will start at $9 per user, per month, “one tenth the cost of traditional BI options,” according to Amazon.

Currently in preview, QuickSight is described by Amazon as follows:

- QuickSight automatically discovers all customer data available in AWS data stores – S3, RDS, Redshift, EMR, DynamoDB, etc. – and then “infers data types and relationships, issues optimal queries to extract relevant data [and] aggregates the results,” according to Amazon.

- This data is then automatically formatted, “without complicated ETL,” and made accessible for analysis through a parallel, columnar, in-memory calculation engine called “Spice.”

- Spice serves up analyses “within seconds… without writing code or using SQL.” Data can also be consumed through a “SQL-like” API by partner tools running on Amazon, including Domo, Qlik, Tableau and Tibco-Jaspersoft.

- A tool called “Autograph” automatically suggests best-fit visualizations, with options including pie charts, histograms, scatter plots, bar graphs, line charts and storyboards. You can also build live dashboards that change in real time along with the data. QuickSight will also be supported with iOS and Android mobile apps.

- Sharing options will include “single-click” capture and bundling of data and visualizations (not just screenshots) so collaborators can interact with, drill down on, and change visualizations. These can be shared on intranets, embedded in applications or embedded on public-facing Web sites.

MyPOV on QuickSight: Amazon is clearly taking on what it called “old-guard” BI solutions such as IBM Cognos, Oracle OBIEE and SAP BusinessObjects. We’ve already seen more modern, cloud-based alternatives from these vendors, including IBM Watson Analytics, Oracle BI Cloud Service and SAP Lumira Cloud. I expect to see yet more cloud BI and analytics options announced by these three vendors within the next few weeks. Amazon is smart to be moving into BI, analysis and visualization now, as incumbents are starting to put all their chips on cloud-based BI and analysis offerings.

If the no-coding, automated visualization capabilities live up to their billing, QuickSight just may compete a bit with the likes of Qlik and Tableau as well as Cognos and BusinessObjects. We’ll also have to see just what you’ll get for $9 per user, per month, and just how easy and broad the collaboration options will be. The pricing is in the freemium range where IBM Watson Analytics, Microsoft Power BI and SAP Lumira Cloud have been establishing cloud beachheads.

If QuickSight has a weakness, it’s that it appears to be entirely geared to consuming data that’s in Amazon’s cloud. Amazon said nothing about connecting to on-premises data sources. In fact, the keynote went on to describe many new services (described below) designed to bring yet more data into Amazon’s cloud. In short, QuickSight is not about hybrid scenarios; it’s about analyzing data that lives in the cloud, which will be fine for many, but not all, companies.

Kinesis Firehose Handles Streaming Scenarios

Amazon already offered Kinesis Streams, a real-time streaming data option, but that service is aimed at technical users. Kinesis Firehose, available immediately, is intended to make it easier to support streaming scenarios such as mobile apps, Web apps or the collection of telemetry or sensor data (read, IoT).

Instead of writing code, “builders” create an Amazon Kinesis Firehose delivery stream in the AWS Management Console. These streams can then be targeted at S3 buckets or Amazon Redshift tables (with more Amazon storage options to come). Users can specify buffer intervals and buffer sizes as well as compression and encryption settings.
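To make the buffering settings concrete, here is a minimal Python sketch of how a size-or-interval buffer behaves: records accumulate until either a byte threshold or a time interval is reached, and the batch is then delivered in a single write. The class, its method names and the `deliver` callback are hypothetical stand-ins for illustration. They are not the Kinesis Firehose API, and the real service’s limits and flush behavior are defined by AWS.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class BufferingDeliveryStream:
    """Hypothetical model of a delivery stream's buffering hints (not the AWS API).

    Records accumulate until the byte threshold or the time interval is reached,
    whichever comes first; the batch is then handed to `deliver`, which stands in
    for a write to an S3 bucket or Redshift table.
    """
    max_bytes: int
    max_interval_s: float
    deliver: Callable[[List[bytes]], None]
    clock: Callable[[], float] = time.monotonic
    _buffer: List[bytes] = field(default_factory=list, init=False)
    _size: int = field(default=0, init=False)
    _opened_at: Optional[float] = field(default=None, init=False)

    def put_record(self, record: bytes) -> None:
        # Start the interval timer when the first record enters an empty buffer.
        if self._opened_at is None:
            self._opened_at = self.clock()
        self._buffer.append(record)
        self._size += len(record)
        self._maybe_flush()

    def tick(self) -> None:
        # Called periodically so a partially filled buffer still flushes on time.
        if self._opened_at is not None:
            self._maybe_flush()

    def _maybe_flush(self) -> None:
        elapsed = self.clock() - self._opened_at
        if self._size >= self.max_bytes or elapsed >= self.max_interval_s:
            self.flush()

    def flush(self) -> None:
        # Deliver the current batch as one unit, then start a fresh buffer.
        if self._buffer:
            self.deliver(self._buffer)
        self._buffer = []
        self._size = 0
        self._opened_at = None
```

Whichever threshold is hit first triggers delivery, which is the trade-off these settings expose: larger buffers mean fewer, bigger objects landing in storage, while shorter intervals mean fresher data downstream. Injecting a fake `clock` makes the time-based flush easy to exercise without waiting.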

MyPOV on Kinesis Firehose: This is a welcome refresh and addition to the Kinesis family. Kinesis Firehose seems to add some of the same visual, point-and-click/drag-and-drop, API-based approaches seen in recently introduced IoT suites, such as Microsoft’s Azure IoT suite. Ease-of-use and self-service seem to be the guiding principles for all data and analysis services these days, and Kinesis Firehose brings streaming capabilities closer to business users.

AWS Snowball: Chuck Bulk Data At AWS

Even if you have fat, dedicated pipes (at great cost), it takes a long time to move big data. Amazon has an existing Import/Export service that lets you ship data overnight to AWS on one-terabyte disks, but even that’s a time-consuming and error-prone approach when moving tens of terabytes or more.


AWS Snowball is a shippable appliance designed to move 50 TB at a time to the cloud.

AWS Snowball, available immediately, is a PC-tower-sized appliance in a shipping box that stores up to 50 terabytes at a time. A digital-ink (Kindle-based) shipping label displays your address on the outbound trip and then automatically resets to Amazon’s address once you load data and are ready to send it back via FedEx or UPS.

Data is encrypted as it’s stored in a Snowball, and you can run several Snowballs in parallel if you need to move hundreds of terabytes or more. The service also validates that all data that’s stored on the device is uploaded and readable on AWS storage. The cost per shipment is $200, and Amazon says it’s the fastest and most cost-effective option available for bulk data loading to the cloud.
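The back-of-the-envelope arithmetic behind Snowball is easy to sketch. The Python below uses only the figures cited above (50 TB per appliance, $200 per shipment), assumes one shipment per appliance, and ignores shipping and any other fees. The network comparison assumes decimal terabytes and a link running at the stated utilization for the whole transfer; the link speeds in the usage note are hypothetical.

```python
import math
from typing import Tuple

SNOWBALL_CAPACITY_TB = 50         # per-appliance capacity cited in this article
SNOWBALL_COST_PER_SHIPMENT = 200  # USD per shipment, as cited; one shipment per appliance assumed


def snowball_plan(data_tb: float) -> Tuple[int, int]:
    """Return (appliances needed, total shipment cost in USD) for data_tb terabytes."""
    appliances = math.ceil(data_tb / SNOWBALL_CAPACITY_TB)
    return appliances, appliances * SNOWBALL_COST_PER_SHIPMENT


def network_transfer_days(data_tb: float, link_mbps: float, utilization: float = 1.0) -> float:
    """Days needed to push data_tb (decimal TB) over a link_mbps link at the given utilization."""
    bits = data_tb * 1e12 * 8
    seconds = bits / (link_mbps * 1e6 * utilization)
    return seconds / 86_400
```

For example, 200 TB works out to four appliances and $800 in shipment charges, while pushing the same 200 TB through a fully utilized 1 Gbps link would take roughly 18.5 days, and about 185 days at 100 Mbps.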

MyPOV on Snowball: Not much to quibble with here. Amazon has thought through a tough problem and has come up with an easier way to ship bulk data with provisions for security, damage- and tamper-resistant shipping, and fool-proof labeling.

AWS Database Migration Service: The Last Mile

Amazon launched two new services promising “freedom from bad database relationships.” The “old guard has treated you poorly,” said Amazon executive Andy Jassy, who cited lock-in, software audits and fines. So it’s “only natural” that companies are “fleeing expensive, proprietary databases,” he said.

The challenge, admitted Jassy, is that migrations are fraught with risks. Do you try to keep services running and hope you can pull off a seamless transition? Or do you shut down one service and then start another, hoping that you can minimize downtime? Another challenge is migrating from a commercial database, like IBM DB2 or Oracle, to a lower-cost cloud alternative, like Amazon Aurora, PostgreSQL or MariaDB (just added to AWS as a MySQL replacement).

The Amazon Database Migration Service will “easily migrate production databases with minimal downtime,” according to Amazon. It’s said to ensure continuous replication from source to target for any size database, with tracking and real-time monitoring. The tool takes 10 to 15 minutes to set up, says Amazon, and the service costs about $3 per terabyte.
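Minimal-downtime migrations generally follow a two-phase pattern: copy a snapshot of the source (the full load), then keep replaying changes captured on the source until the target catches up and applications can be cut over. The Python sketch below shows that general pattern with in-memory dictionaries standing in for tables. The change-event format and function names are hypothetical and say nothing about how the AWS Database Migration Service is implemented internally.

```python
from typing import Dict, Iterable, Optional, Tuple

Row = Dict[str, object]
# Hypothetical change event: (operation, primary key, new row or None for deletes).
Change = Tuple[str, int, Optional[Row]]


def full_load(source: Dict[int, Row]) -> Dict[int, Row]:
    """Phase 1: copy a snapshot of every source row into a new target table."""
    return {pk: dict(row) for pk, row in source.items()}


def apply_changes(target: Dict[int, Row], changes: Iterable[Change]) -> None:
    """Phase 2: replay changes captured on the source, in order, while it stays online."""
    for op, pk, row in changes:
        if op in ("insert", "update"):
            if row is None:
                raise ValueError(f"{op} for key {pk} carries no row")
            target[pk] = dict(row)
        elif op == "delete":
            target.pop(pk, None)
        else:
            raise ValueError(f"unknown operation: {op}")
```

Because the source keeps serving reads and writes during both phases, downtime is limited to the final cutover once the backlog of captured changes has been drained.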

MyPOV on Database Migration: This is another well-intended service where any improvement offered will be welcome, but Amazon anticipated the major concerns (mine and yours) by also introducing the Amazon Schema Conversion Tool described below. Amazon threw the term “open” around quite a bit, but keep in mind that Amazon RDS, Aurora and Redshift are no less proprietary than DB2 or Oracle. Lower cost, certainly, but lock-in is a factor to consider here, too.

AWS Schema Conversion Tool: The First Mile

Migrating an on-premises database to the cloud (say, MySQL on-premises to MySQL running in the cloud) is hard enough. Migrating from one database management system on-premises (say, Microsoft SQL Server or Oracle) to an alternative DBMS running in the cloud (say, MariaDB replacing SQL Server or Aurora replacing Oracle) is harder still. Amazon acknowledged as much by introducing the AWS Schema Conversion Tool.


New AWS Database Migration and Schema Conversion services are both intended to ease database moves, but schema conversion is the tougher task.

Migrating data from one database to another is really the easy last mile. The trickier part is all the preparation work you have to do before the big move. The Schema Conversion Tool is said to “reliably and easily” transform the data from one database type to another, swapping like-for-like tables, partitions, stored procedures and more, according to Amazon. “We think we’ll be able to address 80% of changes automatically,” said Jassy. “This will radically change the cost structure and speed of moving from the old world to the cloud world.”
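Column data types are the easiest part of a schema conversion to automate, which makes them a convenient way to see what an “80% automatic” figure implies in practice. The Python sketch below maps a handful of Oracle column types to PostgreSQL equivalents and routes anything it does not recognize to a manual-review list. The mapping table is an illustrative assumption, not the AWS Schema Conversion Tool’s rule set, and real conversions also have to rewrite stored procedures, triggers and SQL dialect differences that a lookup table cannot handle.

```python
from typing import Dict, List, Tuple

Column = Tuple[str, str]  # (column name, source type)

# Illustrative Oracle -> PostgreSQL type mappings (an assumption, not the tool's actual rules).
TYPE_MAP: Dict[str, str] = {
    "VARCHAR2": "VARCHAR",
    "NUMBER": "NUMERIC",
    "DATE": "TIMESTAMP",  # Oracle DATE also stores a time of day
    "CLOB": "TEXT",
    "BLOB": "BYTEA",
}


def convert_columns(columns: List[Column]) -> Tuple[List[Column], List[str]]:
    """Map known types automatically; return (converted columns, items needing manual review)."""
    converted: List[Column] = []
    manual: List[str] = []
    for name, src_type in columns:
        target_type = TYPE_MAP.get(src_type.upper())
        if target_type is None:
            manual.append(f"{name}: {src_type}")
        else:
            converted.append((name, target_type))
    return converted, manual


def automation_rate(columns: List[Column]) -> float:
    """Fraction of columns the lookup table handled without human help."""
    converted, _ = convert_columns(columns)
    return len(converted) / len(columns) if columns else 1.0
```

Run against five columns where one uses Oracle’s spatial type `SDO_GEOMETRY`, the sketch converts four automatically (an 80% rate) and flags the fifth. That leftover fraction is where the testing and quality-assurance effort concentrates.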

MyPOV on Schema Conversion Tool: Good for Amazon for taking its assistance a level deeper than the Database Migration Service. But practitioners should keep in mind that there will still be plenty of testing and quality-assurance work to do even if the Schema Conversion Tool manages to handle 80% of changes automatically.

Here again we’re talking about a well-intended service wherein any improvement over the status quo will be welcome. Just don’t expect this optimistically named tool to automagically move or swap your DBMS without breaking things in the process. Some breakage is all but certain when switching from one DBMS to another. Apply the rule of thumb often used in home-improvement projects: Count on it taking twice as long and costing twice as much as your initial estimates.

MyPOV on the Big Picture From re:Invent

Amazon offered an impressive collection of announcements at re:Invent. It also didn’t hurt to have executives from the likes of Capital One and GE talking about how they’re consolidating data centers and moving huge chunks of their workloads to AWS – 60% in GE’s case. Keeping things real, it was refreshing to see Amazon acknowledge that many large enterprises (I believe most) will ultimately stick with hybrid cloud approaches. GE, for example, said it’s moving all non-differentiating workloads to AWS while anything related to “company crown jewels” will remain in company-owned data centers.

Where all these new data services are concerned, I think it’s also important for companies to think hybrid rather than putting everything in the cloud. QuickSight might satisfy many self-service data-analysis scenarios, but that won’t eliminate the need for carefully curated data and analyses, high-value decision-support scenarios and mission-critical business-monitoring tasks. As for putting 100% of your data in the cloud, apply the standard advice to investors and diversify. So maybe you go cloud, but you mix public and private cloud.

The point is to not put all of your eggs in one basket. The Weather Company, for one, has split its B2C and B2B clouds between the AWS and IBM clouds, respectively. Keep competition and the threat of lock-in in mind when crafting a new data-management strategy and making long-term plans for how and where you support BI and advanced analytics for routine versus “company crown jewel” needs.
